Learning Alarm: RL Model

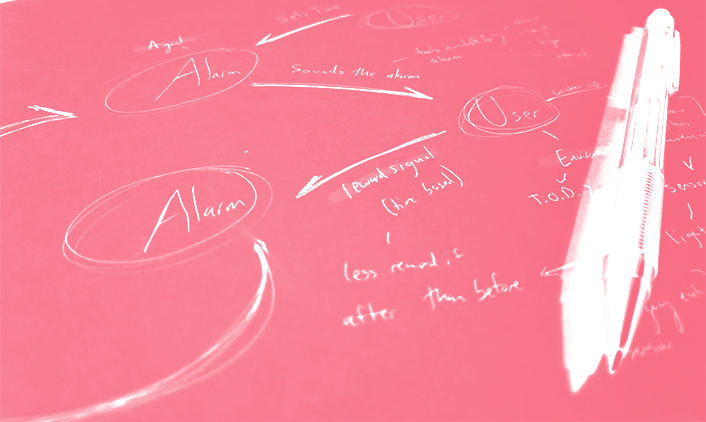

The reinforcement learning problem presented in Learning Alarm is fairly straightforward. Our goal is to learn the best way to wake someone up. Each day, Learning Alarm will try out a new way of waking up the user and will improve itself using reinforcement learning. A reinforcement learning problem can be broken up into a few parts: the agent, the environment, the policy, and the reward function. Below I have outlined each of these for Learning Alarm.

Agent: The agent is the entity that is trying to optimize its behavior for a specific environment. In this case, the agent is the alarm clock, and its goal is to find the best way to wake you up. To keep things simple, I have decided that the alarm can only use audio to wake someone up. It will be able to optimize the following parameters:

- Volume of the alarm

- Type of audio

- How long before the target time to play the alarm (up to one hour before)

- How many alarms to play before the target time

With these four parameters, the alarm should be able to devise an effective strategy to wake you up. Perhaps in the future I will add a snooze button and other inputs/outputs that the user can interact with.
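
To make the action space concrete, here is a minimal Python sketch of one day's action. The four fields come from the list above; the value ranges, the sound names in `AUDIO_TYPES`, the cap `MAX_ALARMS`, and the even spacing of alarms are hypothetical choices for illustration, not part of the design described here.

```python
import random
from dataclasses import dataclass

# Hypothetical choices: only the four parameters and the one-hour limit come
# from the design; the ranges, units, and sound library are assumptions.
AUDIO_TYPES = ("beep", "chime", "music", "nature")
MAX_LEAD_MINUTES = 60  # the first alarm plays at most an hour early
MAX_ALARMS = 5         # hypothetical cap on alarms per morning


@dataclass(frozen=True)
class AlarmAction:
    volume: float      # assumed scale: 0.0 (silent) to 1.0 (loudest)
    audio_type: str    # one of AUDIO_TYPES
    lead_minutes: int  # how long before the target time the first alarm plays
    num_alarms: int    # how many alarms play before the target time

    def __post_init__(self):
        # Reject actions outside the allowed ranges.
        if not 0.0 <= self.volume <= 1.0:
            raise ValueError("volume must be in [0, 1]")
        if self.audio_type not in AUDIO_TYPES:
            raise ValueError(f"unknown audio type: {self.audio_type!r}")
        if not 1 <= self.lead_minutes <= MAX_LEAD_MINUTES:
            raise ValueError("lead time must be 1 to 60 minutes")
        if not 1 <= self.num_alarms <= MAX_ALARMS:
            raise ValueError(f"number of alarms must be 1 to {MAX_ALARMS}")

    def alarm_offsets(self):
        """Minutes before the target time at which each alarm plays.

        Assumption: alarms are spread evenly, the first one lead_minutes
        early and the last one lead_minutes / num_alarms early."""
        step = self.lead_minutes / self.num_alarms
        return [self.lead_minutes - i * step for i in range(self.num_alarms)]


def random_action(rng: random.Random) -> AlarmAction:
    """Draw a uniformly random action, e.g. to explore on the first few days."""
    return AlarmAction(
        volume=round(rng.uniform(0.1, 1.0), 2),
        audio_type=rng.choice(AUDIO_TYPES),
        lead_minutes=rng.randint(1, MAX_LEAD_MINUTES),
        num_alarms=rng.randint(1, MAX_ALARMS),
    )
```

For example, `AlarmAction(0.5, "chime", 30, 3).alarm_offsets()` returns `[30.0, 20.0, 10.0]`. Validating in `__post_init__` keeps the learner from ever proposing an alarm outside the allowed range, such as one more than an hour early.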

Environment: The environment is what the agent acts on and affects. The environment is also responsible for handing out the reward to the agent. In our case, the environment is the user that the alarm is trying to wake up. The alarm acts on the user through the sounds it makes, and the user determines the reward based on how close to the target time he/she wakes up. Initially, when testing the alarm, I will have the user explicitly tell the alarm when he/she woke up. If all goes well, I can eventually integrate sensors that measure light, motion, and sound and have the alarm figure out when the user has actually woken up.
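
During the testing phase, the environment's feedback is just a typed-in wake-up time. A minimal sketch, assuming an `HH:MM` input format and a hypothetical `ManualFeedbackEnvironment` class, turns that report into a signed offset from the target time:

```python
from datetime import date, datetime, time


class ManualFeedbackEnvironment:
    """Hypothetical environment for the testing phase: the user types in the
    time they woke up. A sensor-based version could replace observe()."""

    def __init__(self, target: time):
        self.target = target  # the wake-up time the user asked for

    def observe(self, day: date, reported: str) -> float:
        """Signed minutes between the reported wake-up and the target time.

        Negative means the user woke up early, positive means late.
        Assumes both times fall on the same calendar day."""
        woke = datetime.combine(day, datetime.strptime(reported, "%H:%M").time())
        target = datetime.combine(day, self.target)
        return (woke - target).total_seconds() / 60.0
```

The reward is then a function of this offset. Keeping `observe()` as the only point of contact with the user is one way to leave room for the sensor-based version later.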

Policy: The policy is what maps the input the agent gets from its environment to the action it should take. I will use a neural network to represent the policy and improve it over time.
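
The plan is a neural network policy. As a simpler stand-in, assuming the actions are discretized into a small list of candidate alarms, the sketch below keeps one learnable score (logit) per candidate and samples from a softmax over them. While the alarm is not contextual, a network would see the same input every day, so its output would be a fixed vector of logits; learning those logits directly can express the same set of policies with far fewer parameters. `SoftmaxPolicy` is a hypothetical name.

```python
import math
import random


class SoftmaxPolicy:
    """Stochastic policy with one preference score (logit) per candidate action."""

    def __init__(self, actions, seed=0):
        self.actions = list(actions)
        self.logits = [0.0] * len(self.actions)  # start out indifferent
        self.rng = random.Random(seed)

    def probabilities(self):
        m = max(self.logits)  # subtract the max for numerical stability
        exps = [math.exp(x - m) for x in self.logits]
        total = sum(exps)
        return [e / total for e in exps]

    def sample(self):
        """Pick the index of today's action, weighted by the policy."""
        indices = range(len(self.actions))
        return self.rng.choices(indices, weights=self.probabilities())[0]
```

Sampling, rather than always picking the highest-scoring alarm, is what lets the alarm keep trying new ways of waking the user each day.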

Reward: The reward is how the agent assesses its performance in the environment. Over time, it will learn the policy that brings it the most reward. In this case, the reward is determined by how close to the target time the user wakes up, with one point lost for every minute of difference. Since waking up after the target time is generally worse than waking up before it, the penalty for waking up late is doubled. For example, if the target time is 8:00 am, waking up at 7:50 yields a reward of -10 and waking up at 8:10 yields a reward of -20.
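
The reward rule translates directly into code. A minimal sketch, assuming one point is lost per minute and exposing the doubling as a hypothetical `late_penalty` parameter:

```python
from datetime import datetime


def wake_reward(target: datetime, woke_up: datetime, late_penalty: float = 2.0) -> float:
    """Reward for one morning: 0 is perfect, more negative is worse."""
    minutes = (woke_up - target).total_seconds() / 60.0
    if minutes <= 0:
        # Early (or exactly on time): lose one point per minute before the target.
        return minutes
    # Late: each minute after the target costs late_penalty points.
    return -late_penalty * minutes
```

With a target of 8:00, a 7:50 wake-up scores -10.0 and an 8:10 wake-up scores -20.0, matching the example above.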

Although there seem to be a lot of moving parts, the reinforcement learning problem is fairly straightforward. We can assume that a person’s sleeping habits are fairly constant, so we don’t have to aim for a moving target. Also, the neural network is updated only once a day, when the user reports the wake-up time, so I don’t have to optimize it for speed or for large volumes of data. Initially, I will not make the alarm contextual, meaning that the time the alarm is set for will not influence how the alarm behaves. As I gain more confidence in the system, I can add it as a variable.
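
Because the alarm is not contextual and learns from one reward per day, the daily update can be sketched as a gradient bandit step, a policy-gradient method for a softmax policy with no state. This is a substitute technique chosen for illustration, not the neural network update described above; the step sizes and the exponential moving-average baseline are assumptions.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def daily_update(logits, chosen, reward, baseline, step_size=0.1, baseline_rate=0.1):
    """One gradient-bandit update after today's reward arrives.

    If the reward beats the baseline (a running average of past rewards), the
    chosen action's logit goes up and the others go down; otherwise the
    reverse. Returns the new logits and the new baseline."""
    probs = softmax(logits)
    advantage = reward - baseline
    new_logits = [
        logit + step_size * advantage * ((1.0 if i == chosen else 0.0) - p)
        for i, (logit, p) in enumerate(zip(logits, probs))
    ]
    # Exponential moving average of rewards (assumed baseline choice).
    new_baseline = baseline + baseline_rate * (reward - baseline)
    return new_logits, new_baseline
```

Subtracting the baseline means a reward of -5 only counts as good news if recent mornings averaged worse than that. With a single data point per day, small step sizes keep one unusual morning from swinging the policy too far.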

The challenge for Learning Alarm will be learning the user’s preferences from a relatively small data set. The network only gets the chance to learn once a day, so it will take a long time to collect enough data for the alarm to be effective. My aim is to reduce the training time by exploring other ways to update the policy. To account for anomalies (days when I wake up due to some unexpected circumstance), I will include an override that excludes that day’s data from training. I can also ask the user for initial preferences to use as a starting point and evolve the policy from there, or ask for user input periodically to speed up learning. These are just some of the ideas, and I’m sure I will have more as I start playing around with Learning Alarm. If you have any thoughts or suggestions, please feel free to let me know below.
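
The override and the initial-preference ideas are both small pieces of bookkeeping. A sketch with hypothetical names (`DayRecord`, `training_days`, `initial_logits`), assuming the policy is a list of logits over candidate actions:

```python
from dataclasses import dataclass


@dataclass
class DayRecord:
    action_index: int       # which candidate alarm was used that morning
    reward: float           # reward computed from the reported wake-up time
    override: bool = False  # user flagged the day as an anomaly


def training_days(history):
    """Drop days the user marked as anomalies so they never reach the update."""
    return [day for day in history if not day.override]


def initial_logits(num_actions, preferred=(), boost=1.0):
    """Seed the policy from the user's stated preferences instead of all zeros."""
    return [boost if i in preferred else 0.0 for i in range(num_actions)]
```

Seeding the logits this way only tilts the early sampling toward what the user asked for; the daily updates can still move the policy away from it if those alarms do not work.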
